Super Powers for Researchers

Tue Nov 06 2018

As Isaac Newton once said, “If I have seen further it is by standing on the shoulders of Giants.” That is, we build our knowledge on the theories and findings of researchers both dead and alive. This synthesis of ideas has given us truly remarkable discoveries: vaccines that have saved millions of lives, the ability to communicate instantly with virtually anyone around the world, and a better grasp of the history of the world and of humanity.

Identifying the right shoulders to stand on, however, is becoming increasingly difficult. In 2017 alone, 3,046,494 research articles were published. That abundance of shared scientific information is fantastic, but it also means researchers need a better way to digest the literature.

Ideas are linked across papers through citations. Citations serve different purposes, but receiving them is generally considered a good thing, so a highly cited paper is often assumed to be a highly useful one. This generalization ignores the fact that not all citations are created equal: some simply mention a study, some support it, and some refute it. Today, that information is largely buried, and we simply count citations as a proxy for quality.
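To make the distinction concrete, here is a minimal sketch of the difference between counting citations and profiling them. It assumes each citation has already been labeled as supporting, refuting, or mentioning (the labeling itself is the hard part and is not shown). The names `CitationType`, `Citation`, `citation_count`, and `citation_profile` are hypothetical illustrations, not scite's API, and the two papers are invented purely for the example.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class CitationType(Enum):
    # Hypothetical labels mirroring the three kinds of citation described above.
    SUPPORTING = "supporting"
    REFUTING = "refuting"
    MENTIONING = "mentioning"


@dataclass(frozen=True)
class Citation:
    citing_paper: str   # identifier of the paper making the citation
    kind: CitationType  # how the citing paper uses the cited work


def citation_count(citations: list[Citation]) -> int:
    """The traditional metric: how many times a paper has been cited."""
    return len(citations)


def citation_profile(citations: list[Citation]) -> dict[CitationType, int]:
    """How a paper has been cited: one count per citation type, zeros included."""
    counts = Counter(c.kind for c in citations)
    return {kind: counts[kind] for kind in CitationType}


# Two made-up papers, invented only to illustrate the point.
paper_a = [
    Citation("A1", CitationType.MENTIONING),
    Citation("A2", CitationType.MENTIONING),
    Citation("A3", CitationType.SUPPORTING),
]
paper_b = [
    Citation("B1", CitationType.REFUTING),
    Citation("B2", CitationType.REFUTING),
    Citation("B3", CitationType.MENTIONING),
]

print(citation_count(paper_a), citation_count(paper_b))  # 3 3
print(citation_profile(paper_a))
print(citation_profile(paper_b))
```

Both papers have the same citation count, yet their profiles tell very different stories: one has been supported, the other refuted twice.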

Introducing scite: how, not how many.

What if there were a way to identify which papers tested a claim and, of those, which supported or refuted it? This would make it easier to understand how a finding has been received by the scientific community at large, shifting the emphasis from a paper's impact to its reliability. We’re introducing this capability with scite, a new tool that lets researchers see how a research paper has been cited, not simply how many times it has been cited.

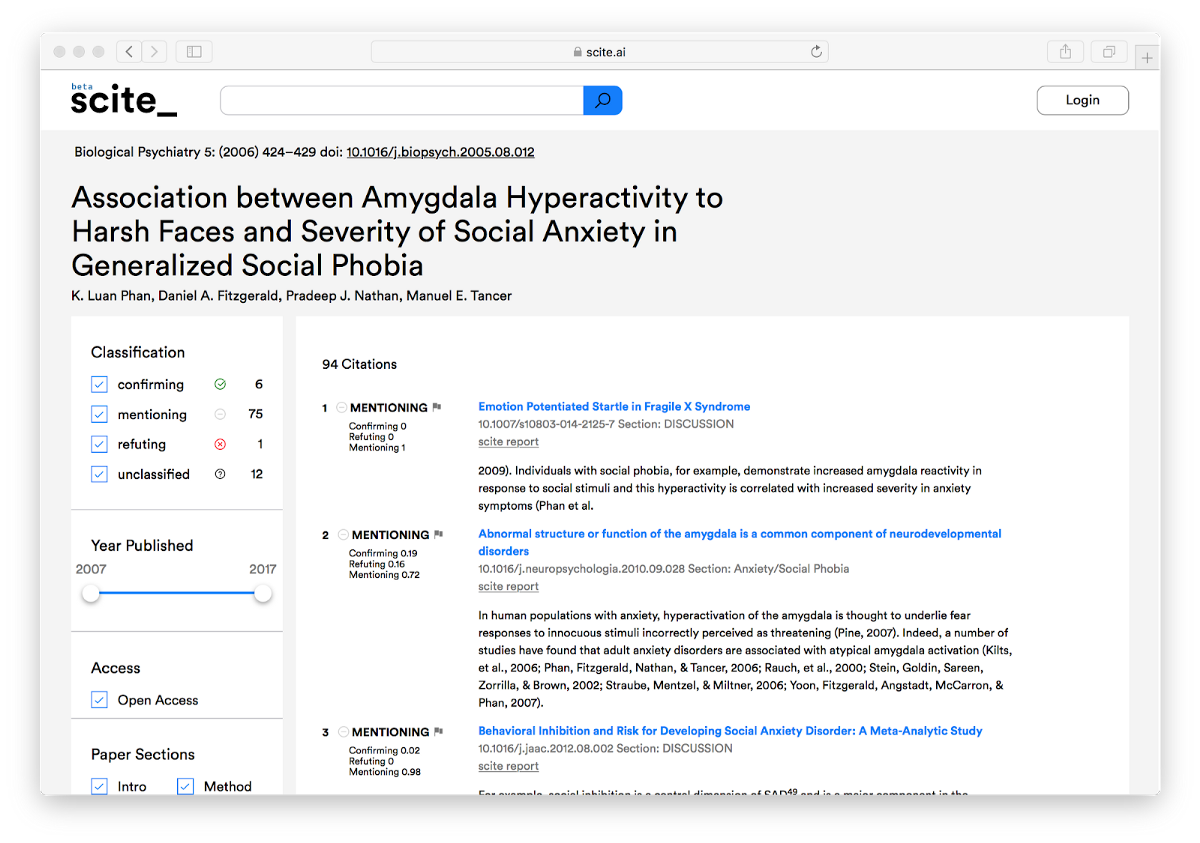

To show the utility of scite, consider the publication “Association between Amygdala Hyperactivity to Harsh Faces and Severity of Social Anxiety in Generalized Social Phobia” by Phan et al. It is highly cited, with 332 citations, and it was published in the most prestigious journal in psychiatry as measured by impact factor. By these measures, the paper is an absolute success, and perhaps it is.

Yet such measures miss a great deal. Specifically, what do those 332 citations say about the paper? Do they test it? Do they agree or disagree with it? Or do they simply refer to it in passing? You could read all 332 citing papers to answer these questions, but that is a massive undertaking that would take days, weeks, or even months.

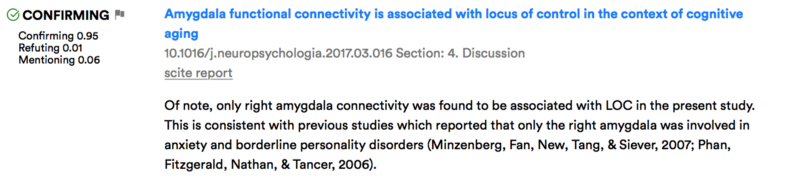

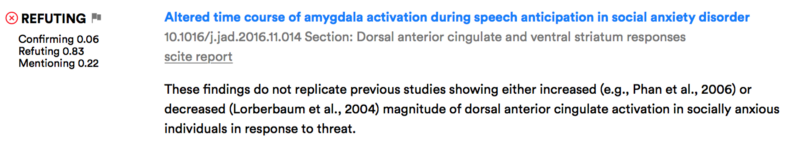

With scite, we can now see that of those 332 citations, at least 6 support the original study and 1 refutes it (note: we currently have access to only 94 of the citations).

If you want to know whether this paper has been confirmed, you only have to read the 7 papers that support or refute it. Put another way, 98% of the citations simply mention the study, and only 2% are needed to assess its validity. Being able to digest large amounts of research this way means a massive increase in researcher efficiency and, consequently, in research itself.
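The percentages above follow from simple arithmetic on the reported figures for Phan et al. The short sketch below reproduces it; the `share` helper is a hypothetical name used only for illustration. Like the text, it uses all 332 citations as the denominator. Because scite found *at least* 6 supporting citations and has access to only 94 so far, the 2% share of testing citations is best read as a floor rather than an exact figure.

```python
def share(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


total_citations = 332        # citations received by Phan et al.
supporting, refuting = 6, 1  # citations classified by scite so far
tested = supporting + refuting

print(f"Papers to read: {tested}")                                         # 7
print(f"Needed to assess validity: {share(tested, total_citations):.1f}%")  # 2.1%
print(f"Simply mention the study: {share(total_citations - tested, total_citations):.1f}%")  # 97.9%
```

Rounded to whole numbers, these give the 2% and 98% quoted above.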

You can now see how a paper has been cited and, most importantly, find which papers to read in-depth. This has consequences not just for digesting research better but for how research is done. By explicitly identifying citations as supporting or refuting, scite provides a feedback mechanism, encouraging better research. We think the ability to do this automatically is akin to giving researchers superpowers.

Come fly with us!

Sign up here for early access: https://www.scite.ai/